Using hand/finger gesture controlled technology to enhance the learning experience of chemistry students.

Developing a new application to build and interact with chemistry models will enhance the learning experience and enrich the learning resources available to students. LeapMotion, an emerging interactive technology that maps where your fingers are in front of a computer, will enable students to use natural gestures to interact with molecules. The integrated experience between the new technology and teaching materials will enhance students' visuospatial ability and thus improve their overall learning outcomes. This project will also build an understanding of how to develop and implement a new technology within a curriculum, adding to the knowledge base so that other staff will be able to do this more efficiently. Future cohorts of students studying chemistry across the university will then use the new technology and the associated teaching materials generated.

Super High Definition Visual Analytics System (TS-VAS system)

The TS-VAS system is an interactive visualisation system that is capable of rendering 3D content at a very high display resolution (4K). The system is equipped with state-of-the-art interactive devices such as a high-precision head tracking system, a fine hand/finger tracking system, RGBD body gesture tracking, facial recognition, and speech recognition. With these interactive capabilities, the system will allow users to interact with ultra-high-resolution 3D content. In addition to its visualisation and high-resolution display capability, the system will provide customized data analysis capability to suit different research purposes.

The TS-VAS system is a tool that can facilitate cross-discipline collaboration with other CIs in Arts, AMC, and Health Sciences, with UTAS strategic development projects such as the Asian Institute and the Sense-T program, and with external organizations such as Tourism Northern Tasmania and Launceston City Council. Within the Internet of Things theme, the TS-VAS system is the central communication space to which other places around Tasmania can be linked. This will make possible a variety of real-time visualisation applications, such as exploration of Sense-T / climate change data, natural disaster management, clinical emergency simulation, and inside 3D digital art.

Encouraging Understanding of Natural Resource Using Emergent Technology

The hypothesis investigated was that a tangible multi-touch table interface encouraged understanding of natural resource issues using map-based constructivist learning tasks. The natural resource issue of preparing for bushfire was chosen to test this hypothesis. It addressed key problems such as residents' low inclination to prepare for bushfire. The system design and content evolved from the participatory involvement of three bushfire community groups.

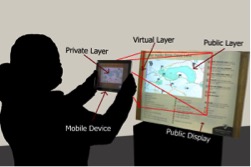

3D Mobile Interactions with Public Displays

The use and prevalence of public displays have grown over the past decade; however, achieving user engagement is one of the main difficulties of public display interactions. Collaboration among viewers enables users to engage more with public displays, but creating an environment where collaboration can happen is difficult. The reasons for this are various: for example, the openness of the environment, the different types of users around the display, and the tools users have available to interact with it. A user interface that solves some of these problems can engage users with public displays more by creating new ways to collaborate around them. In this paper we evaluate a 3D mobile interaction that creates a collaborative environment by letting users position 3D content outside the display using natural human skills. To better understand the relationship between giving each user a private and unique view of the content and increasing collaboration around public displays, we conducted a usability test. In total, 40 participants, divided into groups of two, solved a real-world scenario using either the proposed 3D mobile interaction or a traditional public display. In this paper we present the results of this usability test.

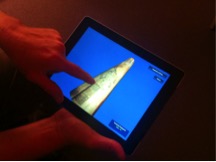

A Hands-On, Mobile Approach to Collaborative Exploration and Discussion of Virtual Museum Artefacts

Many of the objects and artefacts that make up museum collections suffer from problems of limited access, usually caused by their fragility, the conditions they are displayed under, or their physical location. This is problematic in museum learning contexts, where these limits make touching, handling or passing a given object around during collaborative discussions impossible. Interacting with 3D, virtual representations of museum artefacts is a potential solution, but such experiences typically lack the participatory, tactile qualities that make artefacts so engaging.

In this work, we describe the design of a tablet-based interactive system for collaborative exploration and discussion of virtual museum artefacts. Our contextual evaluation of this system provides evidence that hands-on, reality-based interaction with a tablet interface offers a significantly more engaging way to collaboratively explore virtual content than more traditional, desktop-based interaction styles, and provides an experience much more akin to that of handling real, physical objects.

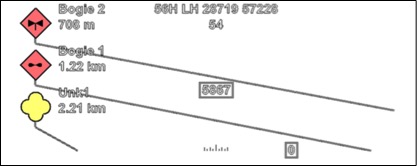

Hardware and software optimization of a night fighting and situational awareness system

The project will identify potential solutions to hardware and software issues associated with a night fighting and situational awareness system being developed by Rockwell Collins Australia Pty Ltd (RCA). Prior to the workshop, RCA had identified three issues of importance: optimisation of hardware/software for real-time video display to the operator; optimisation of the graphical user interface; and optimisation of the hardware enclosure.

Effective Use of Mobile, Wearable, and Ubiquitous Displays in Teaching and Learning Environments

Multiple-display technology has been successfully used in many different applications, ranging from command and control, vehicular and CAD design, scientific visualisation, education and training, and immersive applications to public information displays. There are many reasons why its use is successful in these contexts. Multiple displays provide increased situational awareness; collaborative decision-making capability; the capability to visualise and manage large amounts of data simultaneously; the ability to interact with data at varying levels of detail and on different (and even orthogonal) dimensions; and the capability for enhanced learning environments in classrooms. Yet their use in education for enhancing methods of teaching and learning is still in its infancy, and many challenges are yet to be overcome in these environments.

This project lies at the intersection of ICT and education, and combines multimodal learning and cognitive load theory to investigate techniques by which mobile, wearable, and ubiquitous display technologies can be successfully employed to enhance teaching and learning practices.

Higher-order Learning Tools for Teaching and Learning

If universities are to survive, they must look to the quality and relevance of their teaching activities in ways that they never have before. Concept maps are a graphical tool for organizing and representing knowledge. Strengths of the concept mapping technique are that it requires students to organize knowledge in a new way and articulate relationships between concepts, and that it promotes the integration of new knowledge with previously learned knowledge. When compared with more traditional techniques such as reading, attending lectures, and note taking, concept mapping has been shown to have many benefits.

In this project, we investigate the benefits that the integration of higher-order learning tools like concept maps can provide to students for organizing their thoughts and reinforcing their learning in ICT units. Prototype systems are designed and created, and HCI studies are undertaken.